Researchers from the SPY Lab led by Professor Florian Tramèr along with collaborators have succeeded in extracting secret information on the large language model behind ChatGPT. The team responsibly disclosed the results of their “model stealing attack” to OpenAI. Following the disclosure, the company immediately implemented countermeasures to protect the model.

This work represents the first successful attempt at learning some information about the parameters of an LLM chatbot. In other words: the attack shows that popular chatbots like ChatGPT are susceptible to revealing secret information about the underlying model’s parameters. Although this information was limited, attacks in the future might be even more sophisticated—and therefore more dangerous.

In essence, the attack recovers the last ‘layer’ of the target model, which is the mapping that the LLM applies to its internal state to produce the next word to be predicted. This represents a very small fraction of the total number of parameters of the model, as modern LLMs can have over a hundred layers. However, in a typical LLM architecture, all these layers are the same size. So, recovering the last layer hints at how ‘wide’ the model is, meaning how many weights each of the model’s layers has. And in turn, this reveals something about the overall model size, because a model’s width and depth typically grow proportionally.

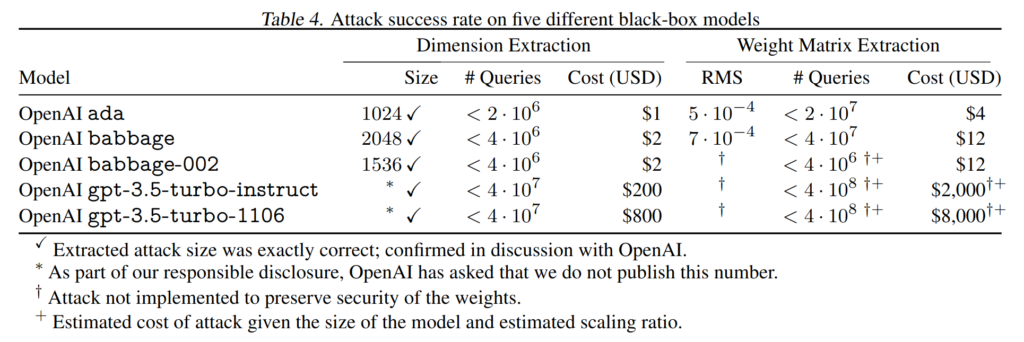

The attack relies on simple linear algebra and information publicly available in OpenAI’s API, which is used to accelerate the attack. Overall, the attack cost amounted to just 800 US dollars in queries to ChatGPT.

After a responsible disclosure, OpenAI confirmed that the extracted parameters were correct. The company went on to make changes to its API to render the attack more expensive, albeit not impossible.

You can read an extended article here and find the paper and process here.