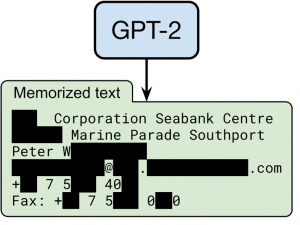

Machine learning systems are becoming critical components in many industries, yet they face serious security and privacy challenges. Attacks on a machine learning model's training data can compromise the integrity of the entire system; deployed models can memorize and leak sensitive training data; and the models themselves can be copied and stolen. In our research, we study the behaviour of machine learning systems in adversarial settings to better understand the current limitations and risks of this nascent and booming technology. We then draw on this knowledge to propose new defense mechanisms that safeguard machine learning applications and their users.

For more details and the full list of publications, visit the official SPY Lab website.

Selected Publications

Kristina Nikolić, Luze Sun, Jie Zhang, Florian Tramèr

The Jailbreak Tax: How Useful are Your Jailbreak Outputs?

International Conference on Machine Learning (ICML), 2025

Nicholas Carlini, Edoardo Debenedetti, Javier Rando, Milad Nasr, Florian Tramèr

AutoAdvExBench: Benchmarking autonomous exploitation of adversarial example defenses

International Conference on Machine Learning (ICML), 2025

Robert Hönig, Javier Rando, Nicholas Carlini, Florian Tramèr

Adversarial Perturbations Cannot Reliably Protect Artists From Generative AI

International Conference on Learning Representations (ICLR), 2025

Edoardo Debenedetti, Jie Zhang, Mislav Balunović, Luca Beurer-Kellner, Marc Fischer, Florian Tramèr

AgentDojo: Benchmarking the Capabilities and Adversarial Robustness of LLM Agents

Conference on Neural Information Processing Systems (NeurIPS), Datasets and Benchmarks Track, 2024

Michael Aerni, Jie Zhang, Florian Tramèr

Evaluations of Machine Learning Privacy Defenses are Misleading

ACM Conference on Computer and Communications Security (CCS), 2024

Nicholas Carlini, Daniel Paleka, Krishnamurthy Dj Dvijotham, Thomas Steinke, Jonathan Hayase, A. Feder Cooper, Katherine Lee, Matthew Jagielski, Milad Nasr, Arthur Conmy, Itay Yona, Eric Wallace, David Rolnick, Florian Tramèr

Stealing Part of a Production Language Model

International Conference on Machine Learning (ICML), 2024

Nicholas Carlini, Matthew Jagielski, Christopher A. Choquette-Choo, Daniel Paleka, Will Pearce, Hyrum Anderson, Andreas Terzis, Kurt Thomas, Florian Tramèr

Poisoning Web-Scale Training Datasets is Practical

IEEE Symposium on Security and Privacy (S&P), 2024

Milad Nasr, Jamie Hayes, Thomas Steinke, Borja Balle, Florian Tramèr, Matthew Jagielski, Nicholas Carlini, Andreas Terzis

Tight Auditing of Differentially Private Machine Learning

USENIX Security Symposium (USENIX Security), 2023 (Distinguished paper award)

Nicholas Carlini, Jamie Hayes, Milad Nasr, Matthew Jagielski, Vikash Sehwag, Florian Tramèr, Borja Balle, Daphne Ippolito, Eric Wallace

Extracting Training Data from Diffusion Models

USENIX Security Symposium (USENIX Security), 2023

Javier Rando, Daniel Paleka, David Lindner, Lennart Heim, Florian Tramèr

Red-Teaming the Stable Diffusion Safety Filter

NeurIPS ML Safety Workshop, 2022 (Best paper award)

Florian Tramèr, Reza Shokri, Ayrton San Joaquin, Hoang Le, Matthew Jagielski, Sanghyun Hong, Nicholas Carlini

Truth Serum: Poisoning Machine Learning Models to Reveal Their Secrets

ACM Conference on Computer and Communications Security (CCS), 2022